This post is part of a series on intelligent system design. You might also be interested in my previous post, 5 Challenges to Your Machine Learning Project.

One of the guiding design principles for intelligent systems is to empower end users. If we want people to trust machines, we must share information about the underlying models and the reasoning behind the results of algorithms. This is even more vital for business applications, where users are held accountable for every decision they make.

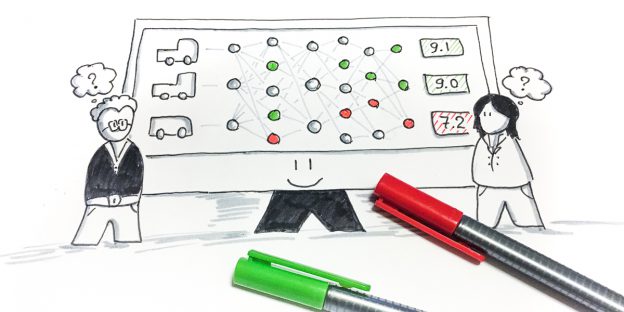

It’s widely accepted that intelligent systems must come with a certain level of transparency. There’s even a term for it: explainable AI. But that’s just the beginning. As designers, we need to ask ourselves how explainable AI ties into user interaction. What do we need to think about when we explain the results and recommendations that come from built-in intelligence? And how can we make it a seamless experience that feels natural to users?

My next article is about explainable AI and its UX.